NOTE: THIS DOCUMENT IS OBSOLETE, PLEASE CHECK THE NEW VERSION: "Mathematics of the Discrete Fourier Transform (DFT), with Audio Applications --- Second Edition", by Julius O. Smith III, W3K Publishing, 2007, ISBN 978-0-9745607-4-8. - Copyright © 2017-09-28 by Julius O. Smith III - Center for Computer Research in Music and Acoustics (CCRMA), Stanford University


Derivation of Taylor Series Expansion with Remainder

We repeat the derivation of the preceding section, but this time we treat the error term more carefully.

Again we want to approximate

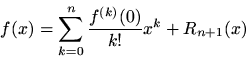

with an

th-order polynomial:

is the ''remainder term'' which we will no longer assume is zero.

Our problem is to find the coefficients $\{f_i\}_{i=0}^{n}$ so as to minimize $R_{n+1}(x)$ over some interval $I$ containing $x$. There are many "optimality criteria" we could choose. The one that falls out naturally here is called "Padé" approximation. Padé approximation sets the error value and its first $n$ derivatives to zero at a single chosen point, which we take to be $x=0$. Since all $n+1$ "degrees of freedom" in the polynomial coefficients $f_i$ are used to set derivatives to zero at one point, the approximation is termed "maximally flat" at that point. Padé approximation comes up often in signal processing. For example, it is the sense in which Butterworth lowpass filters are optimal. (Their frequency responses are maximally flat at dc.) Also, Lagrange interpolation filters can be shown to be maximally flat at dc in the frequency domain.

Setting $x=0$ in the above polynomial approximation produces

$$f(0) = f_0 + R_{n+1}(0) = f_0,$$

where we have used the fact that the error is to be exactly zero at $x=0$.

Differentiating the polynomial approximation and setting $x=0$ gives

$$f'(0) = f_1 + R'_{n+1}(0) = f_1,$$

where we have used the fact that we also want the slope of the error to be exactly zero at $x=0$.

In the same way, we find

$$f^{(k)}(0) = k!\, f_k + R_{n+1}^{(k)}(0) = k!\, f_k$$

for $k = 2, 3, 4, \ldots, n$, where the first $n$ derivatives of the remainder term are all zero. Solving these relations for the desired constants yields the $n$th-order Taylor series expansion of $f(x)$ about the point $x=0$,

$$f(x) = \sum_{k=0}^{n} \frac{f^{(k)}(0)}{k!}\, x^k + R_{n+1}(x),$$

as before, but now we better understand the remainder term.

From this derivation, it is clear that the approximation error (remainder term) is smallest in the vicinity of $x=0$. All degrees of freedom in the polynomial coefficients were devoted to minimizing the approximation error and its derivatives at $x=0$. As you might expect, the approximation error generally worsens as $x$ gets farther away from $0$.
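As a rough numerical illustration of how the remainder behaves, the following Python sketch evaluates the error of a third-order Taylor polynomial at several distances from the expansion point. The test function $f(x) = e^x$, the order $n = 3$, and the helper names `taylor_coeffs_exp`, `polyval_ascending`, and `remainder` are all assumptions chosen for this example, not part of the original text.

```python
import math

def taylor_coeffs_exp(n):
    """Coefficients f_k = f^(k)(0)/k! for the assumed f(x) = exp(x), k = 0..n."""
    # Every derivative of exp is exp, so f^(k)(0) = 1 for all k.
    return [1.0 / math.factorial(k) for k in range(n + 1)]

def polyval_ascending(coeffs, x):
    """Evaluate f_0 + f_1 x + ... + f_n x^n using Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def remainder(n, x):
    """Remainder: f(x) minus the nth-order Taylor polynomial about 0."""
    return math.exp(x) - polyval_ascending(taylor_coeffs_exp(n), x)

if __name__ == "__main__":
    n = 3
    for x in (0.0, 0.1, 0.5, 1.0, 2.0):
        print(f"x = {x:4.1f}   remainder = {remainder(n, x):.3e}")
```

For this particular function the remainder vanishes at the expansion point and grows as the evaluation point moves away from it, which is the "maximally flat at one point" behavior described above.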

To obtain a more uniform approximation over some interval

in

, other kinds of error criteria may be employed. This is classically called ''economization of series,'' but nowadays we may simply call itpolynomial approximation under different error criteria. In Matlab, the function polyfit(x,y,n) will find the coefficients of a polynomial

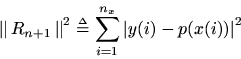

of degree n that fits the data y over the points x in a least-squares sense. That is, it minimizes

where.
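To make the least-squares criterion concrete, here is a minimal Python sketch of a polyfit-style fit, compared against the Taylor polynomial of the same degree over an interval. It is not Matlab's implementation: the helper `polyfit_ls` is a hypothetical stand-in that solves the normal equations directly, and the test function $e^x$, the interval $[-1, 1]$, the 101 sample points, and the degree 2 are assumptions made for illustration. (NumPy's `numpy.polyfit(x, y, deg)` offers a comparable call with the same highest-power-first coefficient ordering.)

```python
import math

def polyfit_ls(x, y, n):
    """Least-squares polynomial fit; returns coefficients, highest power first.

    Solves the normal equations (V^T V) c = V^T y, where V is the Vandermonde
    matrix of the sample points. Adequate for small n; an orthogonalization
    (QR) based solver is numerically preferable for larger problems.
    """
    m = n + 1
    # Augmented normal-equation matrix, coefficients in ascending powers.
    A = [[sum(xi ** (r + c) for xi in x) for c in range(m)]
         + [sum(yi * xi ** r for xi, yi in zip(x, y))]
         for r in range(m)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, m):
            factor = A[r][col] / A[col][col]
            for c in range(col, m + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution.
    coeffs = [0.0] * m
    for r in reversed(range(m)):
        s = sum(A[r][c] * coeffs[c] for c in range(r + 1, m))
        coeffs[r] = (A[r][m] - s) / A[r][r]
    return coeffs[::-1]  # reorder to highest power first

def polyval(p, x):
    """Evaluate a polynomial given highest-power-first coefficients."""
    result = 0.0
    for c in p:
        result = result * x + c
    return result

# Sample the assumed test function exp(x) on the interval [-1, 1].
xs = [-1.0 + 2.0 * i / 100 for i in range(101)]
ys = [math.exp(xi) for xi in xs]

n = 2
p_ls = polyfit_ls(xs, ys, n)
p_taylor = [0.5, 1.0, 1.0]  # x^2/2 + x + 1, the Taylor polynomial about 0

max_err_ls = max(abs(yi - polyval(p_ls, xi)) for xi, yi in zip(xs, ys))
max_err_taylor = max(abs(yi - polyval(p_taylor, xi)) for xi, yi in zip(xs, ys))
print("max |error| on interval, least squares:", max_err_ls)
print("max |error| on interval, Taylor:       ", max_err_taylor)
```

In this setup the least-squares polynomial gives up exactness at $x = 0$ in exchange for a smaller worst-case error across the whole interval, while the Taylor polynomial is exact at $x = 0$ and degrades toward the interval edges.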