NOTE: THIS DOCUMENT IS OBSOLETE, PLEASE CHECK THE NEW VERSION: "Mathematics of the Discrete Fourier Transform (DFT), with Audio Applications --- Second Edition", by Julius O. Smith III, W3K Publishing, 2007, ISBN 978-0-9745607-4-8. - Copyright © 2017-09-28 by Julius O. Smith III - Center for Computer Research in Music and Acoustics (CCRMA), Stanford University


An Example of Changing Coordinates in 2D

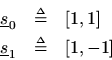

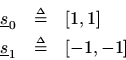

As a simple example, let's pick the following pair of new coordinate vectors in 2D:

$$\underline{s}_0 = [1, 1], \qquad \underline{s}_1 = [1, -1]$$

These happen to be the DFT sinusoids for $N=2$, having frequencies $f_0 = 0$ ("dc") and $f_1 = f_s/2$ (half the sampling rate). (The sampled complex sinusoids of the DFT reduce to real numbers only for $N=1$ and $N=2$.) We already showed in an earlier example that these vectors are orthogonal. However, they are not orthonormal, since the norm is $\sqrt{2}$ in each case. Let's try projecting $\underline{x} = [x_0, x_1]$ onto these vectors and seeing if we can reconstruct it by summing the projections.

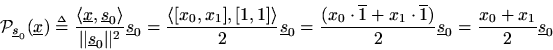

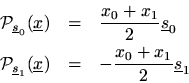

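The projection of $\underline{x}$ onto $\underline{s}_0$ is by definition

$$\mathbf{P}_{\underline{s}_0}(\underline{x}) = \frac{\langle \underline{x}, \underline{s}_0 \rangle}{\|\underline{s}_0\|^2}\,\underline{s}_0 = \frac{x_0 + x_1}{2}\,\underline{s}_0 = \left[\frac{x_0 + x_1}{2},\ \frac{x_0 + x_1}{2}\right].$$

Similarly, the projection of $\underline{x}$ onto $\underline{s}_1$ is

$$\mathbf{P}_{\underline{s}_1}(\underline{x}) = \frac{\langle \underline{x}, \underline{s}_1 \rangle}{\|\underline{s}_1\|^2}\,\underline{s}_1 = \frac{x_0 - x_1}{2}\,\underline{s}_1 = \left[\frac{x_0 - x_1}{2},\ -\frac{x_0 - x_1}{2}\right].$$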

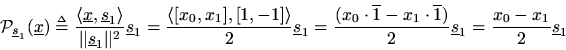

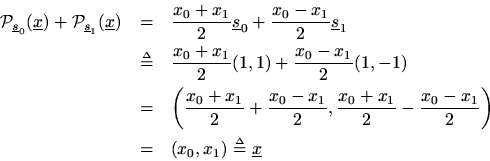

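The sum of these projections is then

$$\mathbf{P}_{\underline{s}_0}(\underline{x}) + \mathbf{P}_{\underline{s}_1}(\underline{x}) = \frac{x_0 + x_1}{2}\,[1, 1] + \frac{x_0 - x_1}{2}\,[1, -1] = [x_0, x_1] = \underline{x}.$$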

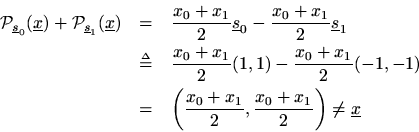
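The reconstruction above can be checked numerically. The following is a minimal sketch in plain Python, assuming the test vector $[3, 5]$ and the helper names `inner`, `project`, and `sum_of_projections`, none of which come from the text:

```python
def inner(u, v):
    """Inner product <u, v> = sum over n of u[n] * conj(v[n])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def project(x, s):
    """Orthogonal projection of x onto s: (<x, s> / ||s||^2) * s."""
    coeff = inner(x, s) / inner(s, s)
    return [coeff * c for c in s]

def sum_of_projections(x, vectors):
    """Add up the projections of x onto each vector in the list."""
    total = [0.0] * len(x)
    for s in vectors:
        total = [t + c for t, c in zip(total, project(x, s))]
    return total

x = [3.0, 5.0]          # arbitrary test vector (an assumption)
s0 = [1.0, 1.0]         # "dc" sinusoid for N = 2
s1 = [1.0, -1.0]        # half-sampling-rate sinusoid for N = 2

p0 = project(x, s0)     # [4.0, 4.0]
p1 = project(x, s1)     # [-1.0, 1.0]
x_hat = sum_of_projections(x, [s0, s1])
print(p0, p1, x_hat)    # x_hat equals x
```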

It worked!

Now consider another example:

$$\underline{s}_0 = [1, 1], \qquad \underline{s}_1 = [-1, -1]$$

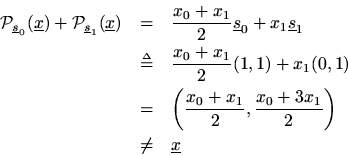

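The projections of $\underline{x}$ onto these vectors are

$$\mathbf{P}_{\underline{s}_0}(\underline{x}) = \frac{x_0 + x_1}{2}\,\underline{s}_0, \qquad \mathbf{P}_{\underline{s}_1}(\underline{x}) = -\frac{x_0 + x_1}{2}\,\underline{s}_1.$$

The sum of the projections is

$$\mathbf{P}_{\underline{s}_0}(\underline{x}) + \mathbf{P}_{\underline{s}_1}(\underline{x}) = \frac{x_0 + x_1}{2}\,\underline{s}_0 - \frac{x_0 + x_1}{2}\,\underline{s}_1 = [x_0 + x_1,\ x_0 + x_1] \neq \underline{x}.$$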

Something went wrong, but what? It turns out that a set of $N$ vectors can be used to reconstruct an arbitrary vector in $\mathbb{C}^N$ from its projections only if they are linearly independent. In general, a set of vectors is linearly independent if none of them can be expressed as a linear combination of the others in the set. What this means intuitively is that they must "point in different directions" in $N$-space. In this example, $\underline{s}_1 = -\underline{s}_0$, so that they lie along the same line in $N$-space. As a result, they are linearly dependent: one is a linear combination of the other.
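The failure for a linearly dependent pair can be seen numerically. This is a minimal sketch, taking as an illustrative assumption the pair $[1, 1]$ and $[-1, -1]$ and the test vector $[3, 5]$; the helper names are hypothetical:

```python
def inner(u, v):
    """Inner product <u, v> = sum over n of u[n] * conj(v[n])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def project(x, s):
    """Orthogonal projection of x onto s: (<x, s> / ||s||^2) * s."""
    coeff = inner(x, s) / inner(s, s)
    return [coeff * c for c in s]

x = [3.0, 5.0]          # arbitrary test vector (an assumption)
s0 = [1.0, 1.0]
s1 = [-1.0, -1.0]       # s1 = -s0, so the pair is linearly dependent

p0 = project(x, s0)     # [4.0, 4.0]
p1 = project(x, s1)     # also [4.0, 4.0]: same line, same projection
total = [a + b for a, b in zip(p0, p1)]
print(total)            # [8.0, 8.0], which is not x
```

Both projections land on the same line, so their sum can only ever produce vectors with equal components.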

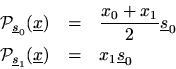

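Consider this example:

$$\underline{s}_0 = [1, 1], \qquad \underline{s}_1 = [0, 1]$$

These point in different directions, but they are not orthogonal. What happens now? The projections are

$$\mathbf{P}_{\underline{s}_0}(\underline{x}) = \frac{x_0 + x_1}{2}\,\underline{s}_0, \qquad \mathbf{P}_{\underline{s}_1}(\underline{x}) = x_1\,\underline{s}_1.$$

The sum of the projections is

$$\mathbf{P}_{\underline{s}_0}(\underline{x}) + \mathbf{P}_{\underline{s}_1}(\underline{x}) = \left[\frac{x_0 + x_1}{2},\ \frac{x_0 + 3x_1}{2}\right] \neq \underline{x}.$$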

So, even though the vectors are linearly independent, the sum of projections onto them does not reconstruct the original vector. Since the sum of projections worked in the orthogonal case, and since orthogonality implies linear independence, we might conjecture at this point that the sum of projections onto a set of $N$ vectors will reconstruct the original vector only when the vector set is orthogonal, and this is true, as we will show.
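A quick numerical check of the non-orthogonal case is sketched below. The pair $[1, 1]$, $[0, 1]$ and the test vector $[3, 5]$ are illustrative assumptions, as are the helper names:

```python
def inner(u, v):
    """Inner product <u, v> = sum over n of u[n] * conj(v[n])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def project(x, s):
    """Orthogonal projection of x onto s: (<x, s> / ||s||^2) * s."""
    coeff = inner(x, s) / inner(s, s)
    return [coeff * c for c in s]

x = [3.0, 5.0]          # arbitrary test vector (an assumption)
s0 = [1.0, 1.0]
s1 = [0.0, 1.0]         # independent of s0, but <s0, s1> = 1, not 0

p0 = project(x, s0)     # [4.0, 4.0]
p1 = project(x, s1)     # [0.0, 5.0]
total = [a + b for a, b in zip(p0, p1)]
print(inner(s0, s1), total)   # 1.0 [4.0, 9.0]
```

The component of $\underline{x}$ along the shared direction is counted twice, which is why the sum overshoots in the second coordinate.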

It turns out that one can apply an orthogonalizing process, called Gram-Schmidt orthogonalization, to any $N$ linearly independent vectors in $\mathbb{C}^N$ so as to form an orthogonal set which will always work. This will be derived in Section 6.7.3.
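As a preview, here is a minimal sketch of the classical Gram-Schmidt step without normalization, which is not necessarily the form derived in Section 6.7.3. The input pair $[1, 1]$, $[0, 1]$, the test vector, and the function names are assumptions made for illustration:

```python
def inner(u, v):
    """Inner product <u, v> = sum over n of u[n] * conj(v[n])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def project(x, s):
    """Orthogonal projection of x onto s: (<x, s> / ||s||^2) * s."""
    coeff = inner(x, s) / inner(s, s)
    return [coeff * c for c in s]

def gram_schmidt(vectors):
    """Return an orthogonal (not normalized) set spanning the same space.

    Assumes the input vectors are linearly independent.
    """
    ortho = []
    for v in vectors:
        w = list(v)
        for q in ortho:
            # Remove the component of v that lies along q.
            w = [a - b for a, b in zip(w, project(v, q))]
        ortho.append(w)
    return ortho

x = [3.0, 5.0]                                   # arbitrary test vector
q0, q1 = gram_schmidt([[1.0, 1.0], [0.0, 1.0]])  # q1 becomes [-0.5, 0.5]
x_hat = [a + b for a, b in zip(project(x, q0), project(x, q1))]
print(q0, q1, x_hat)                             # x_hat equals x
```

After orthogonalization the sum of projections reconstructs the test vector, in contrast with the original non-orthogonal pair.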

Obviously, there must be at least $N$ vectors in the set. Otherwise, there would be too few degrees of freedom to represent an arbitrary $\underline{x} \in \mathbb{C}^N$. That is, given the $N$ coordinates $\{x(n)\}_{n=0}^{N-1}$ of $\underline{x}$ (which are scale factors relative to the coordinate vectors $\{\underline{e}_n\}$ in $\mathbb{C}^N$), we have to find at least $N$ coefficients of projection (which we may think of as coordinates relative to new coordinate vectors $\{\underline{s}_k\}$). If we compute only $M < N$ coefficients, then we would be mapping a set of $N$ complex numbers to $M < N$ numbers. Such a mapping cannot be invertible in general. It also turns out that $N$ linearly independent vectors are always sufficient. The next section will summarize the general results along these lines.
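Both counting claims can be illustrated in 2D. The sketch below is restricted to real vectors and uses hypothetical names (`coefficient`, `solve_2d`) and arbitrarily chosen test vectors. It shows that a single coefficient ($M = 1 < N = 2$) cannot distinguish two different vectors, while two linearly independent vectors always yield coordinates that rebuild $\underline{x}$, here found by solving a 2-by-2 linear system with Cramer's rule rather than by projection:

```python
def inner(u, v):
    """Inner product <u, v> = sum over n of u[n] * conj(v[n])."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def coefficient(x, s):
    """Coefficient of projection of x onto s: <x, s> / ||s||^2."""
    return inner(x, s) / inner(s, s)

def solve_2d(x, s0, s1):
    """Find real a, b with x = a*s0 + b*s1 using Cramer's rule."""
    det = s0[0] * s1[1] - s1[0] * s0[1]
    if det == 0:
        raise ValueError("s0 and s1 are linearly dependent")
    a = (x[0] * s1[1] - s1[0] * x[1]) / det
    b = (s0[0] * x[1] - x[0] * s0[1]) / det
    return a, b

s0 = [1.0, 1.0]
s1 = [0.0, 1.0]          # independent of s0, not orthogonal to it

# Too few coefficients: two different vectors give the same single number.
xa = [3.0, 5.0]
xb = [5.0, 3.0]
c_a = coefficient(xa, s0)    # 4.0
c_b = coefficient(xb, s0)    # 4.0

# Enough independent vectors: the coordinates (a, b) rebuild xa exactly.
a, b = solve_2d(xa, s0, s1)  # (3.0, 2.0)
rebuilt = [a * p + b * q for p, q in zip(s0, s1)]
print(c_a, c_b, (a, b), rebuilt)
```

Note that the coordinates found this way differ from the coefficients of projection unless the vectors are orthogonal.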